Databricks with spark partitioning

The one where I use a databricks notebook with spark and partitioned data.

The code for this project is available in GitHub - I’m using a branch for each part and merging each part into the latest branch. See the ReadMe.md in each branch for the story.

Introduction

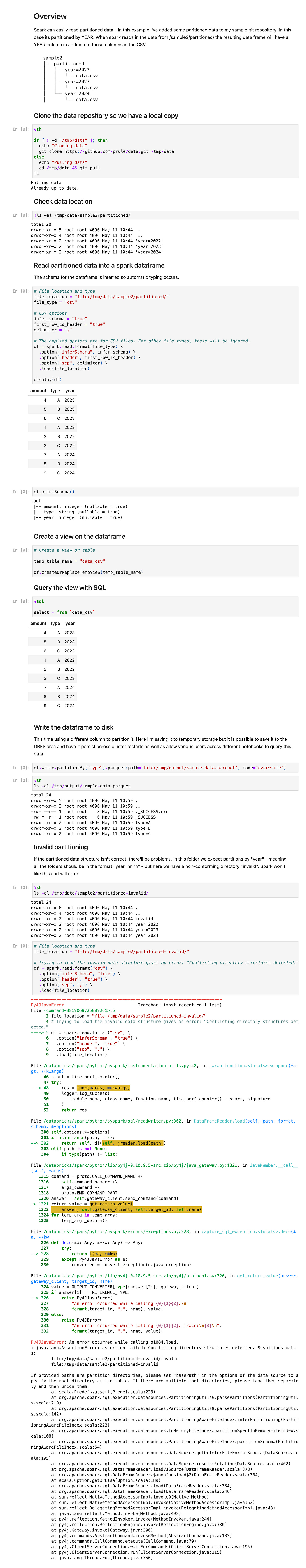

Today's exercise is using a DataBricks notebook to play with spark dataframes using partitioning - with big data this is useful to parallelize processing across a spark cluster.

A screen shot of the full notebook can be seen at the end of this article.

Details

I have a little git repository with some simple sample data. I clone this so I have a local copy:

git clone https://github.com/prule/data.git /tmp/datawhich looks like this:

sample2

├── partitioned

│ ├── year=2022

│ │ └── data.csv

│ ├── year=2023

│ │ └── data.csv

│ └── year=2024

│ └── data.csvThe year value isn't in the CSV files, but is provided by the directory name. When the dataframe is loaded the year value will be a column.

In a partitioned table, data are usually stored in different directories, with partitioning column values encoded in the path of each partition directory. All built-in file sources (including Text/CSV/JSON/ORC/Parquet) are able to discover and infer partitioning information automatically.

Now load it into a spark dataframe:

df = spark.read.format(file_type) \

.option("inferSchema", infer_schema) \

.option("header", first_row_is_header) \

.option("sep", delimiter) \

.load(file_location)The schema is inferred so columns are typed appropriately:

root

|-- amount: integer (nullable = true)

|-- type: string (nullable = true)

|-- year: integer (nullable = true)There's lots that can be done with the dataframe to transform the data now, but that's for another time.

Next though is to create a view from the dataframe:

df.createOrReplaceTempView("data_csv")which we can then query with SQL:

%sql

select * from `data_csv`Also, the dataframe can be written to disk, in this case partitioned by type instead of date (just as a example):

df.write.partitionBy("type").parquet(path='file:/tmp/output/sample-data.parquet', mode='overwrite')Which produces

sample-data.parquet

├── type=A

├── type=B

├── type=CNote that if your data isn't properly partitioned on disk the dataframe will fail to load.

So in this case it will fail since the "invalid" directory doesn't follow the expected "year=nnnn" format:

└── partitioned-invalid

├── invalid

│ └── data.csv

├── year=2022

│ └── data.csv

├── year=2023

│ └── data.csv

└── year=2024

└── data.csvSummary

Spark partitioning is a technique used to distribute data across multiple nodes in a Spark cluster. It enables parallel processing and improves performance by allowing multiple tasks to work on different partitions of the data simultaneously.

Here I've loaded some partitioned data into a spark dataframe, demonstrated how it can be queried or transformed and then persisted to disk.

I've exported the notebook as a ipynb file, so I can convert it to html:

pip install jupyter

jupyter nbconvert --to html Spark\ Partitioned\ Example.ipynbAnd now, as a simple HTML file it can easily be captured as a screenshot using the web developer tools available in Chrome, Safari, and Firefox.